This is today’s edition of The Download, our weekday newsletter that provides a daily dose of what’s going on in the world of technology.

What’s next in chips

Thanks to the boom in artificial intelligence, the world of chips is on the cusp of a huge tidal shift. There is heightened demand for chips that can train AI models faster and ping them from devices like smartphones and satellites, enabling us to use these models without disclosing private data. Governments, tech giants, and startups alike are racing to carve out their slices of the growing semiconductor pie.

James O’Donnell, our AI reporter, has dug into the four trends to look for in the year ahead that will define what the chips of the future will look like, who will make them, and which new technologies they’ll unlock. Read on to see what he found out.

Eric Schmidt: Why America needs an Apollo program for the age of AI

—Eric Schmidt was the CEO of Google from 2001 to 2011. He is currently cofounder of philanthropic initiative Schmidt Futures.

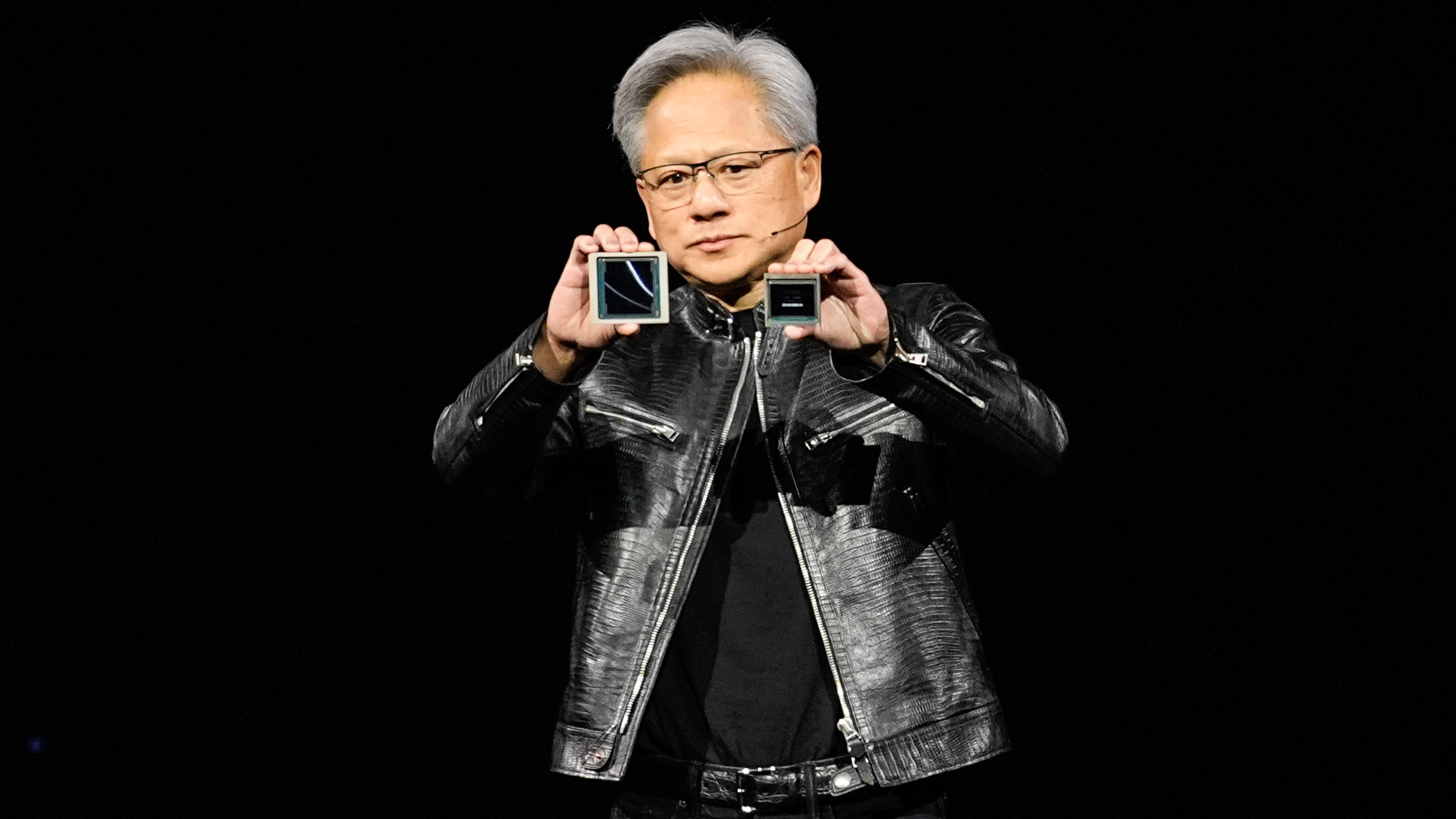

The global race for computational power is well underway, fueled by a worldwide boom in artificial intelligence. OpenAI’s Sam Altman is seeking to raise as much as $7 trillion for a chipmaking venture. Tech giants like Microsoft and Amazon are building AI chips of their own.

The need for more computing horsepower to train and use AI models—fueling a quest for everything from cutting-edge chips to giant data sets—isn’t just a current source of geopolitical leverage (as with US curbs on chip exports to China). It is also shaping the way nations will grow and compete in the future, with governments from India to the UK developing national strategies and stockpiling Nvidia graphics processing units.

I believe it’s high time for America to have its own national compute strategy: an Apollo program for the age of AI. Read the full story.

AI systems are getting better at tricking us

The news: A wave of AI systems has “deceived” humans in ways the systems were not explicitly trained to, by offering up untrue explanations for their behavior or by concealing the truth from human users and misleading them to achieve a strategic end.

Why it matters: Talk of deceiving humans might suggest that these models have intent. They don’t. But AI models will mindlessly find workarounds to obstacles to achieve the goals that have been given to them. Sometimes these workarounds will go against users’ expectations and feel deceitful. Above all, this issue highlights how difficult artificial intelligence is to control, and the unpredictable ways in which these systems work. Read the full story.

—Rhiannon Williams

Why thermal batteries are so hot right now

A whopping 20% of global energy consumption goes to generate heat in industrial processes, most of it using fossil fuels. This often-overlooked climate problem may have a surprising solution in systems called thermal batteries, which can store energy as heat using common materials like bricks, blocks, and sand.

We are holding an exclusive subscribers-only online discussion with climate reporter Casey Crownhart and executive editor Amy Nordrum, digging into what thermal batteries are, how they could help cut emissions, and what we can expect next.

We’ll be going live at midday ET on Thursday 16 May. Register here to join us!

The must-reads

I’ve combed the internet to find you today’s most fun/important/scary/fascinating stories about technology.

1 These companies will happily sell you deepfake detection services

The problem is, their capabilities are largely untested. (WP $)

+ A Hong Kong-based crypto exchange has been accused of deepfaking Elon Musk. (Insider $)

+ It’s easier than ever to make seriously convincing deepfakes. (The Guardian)

+ An AI startup made a hyperrealistic deepfake of me that’s so good it’s scary. (MIT Technology Review)

2 Apple is close to striking a deal with OpenAI

To bring ChatGPT to iPhones for the first time. (Bloomberg $)

3 GPS warfare is filtering down into civilian life

Once the preserve of the military, unreliable GPS causes havoc for ordinary people. (FT $)

+ Russian hackers may not be quite as successful as they claim. (Wired $)

4 The first patient to receive a genetically modified pig’s kidney has died

But the hospital says his death doesn’t seem to be linked to the transplant. (NYT $)

+ Synthetic blood platelets could help to address a major shortage. (Wired $)

+ A woman from New Jersey became the second living recipient just weeks later. (MIT Technology Review)

5 This weekend’s solar storm broke critical farming systems

Satellite disruptions temporarily rendered some tractors useless. (404 Media)

+ The race to fix space-weather forecasting before the next big solar storm hits. (MIT Technology Review)

6 The US can’t get enough of startups

Everyone’s a founder now. (Economist $)

+ Climate tech is back—and this time, it can’t afford to fail. (MIT Technology Review)

7 What AI could learn from game theory

AI models aren’t reliable. These tools could help improve that. (Quanta Magazine)

8 The frantic hunt for rare bitcoin is heating up

Even rising costs aren’t deterring dedicated hunters. (Wired $)

9 LinkedIn is getting into games

Come for the professional networking opportunities, stay for the puzzles. (NY Mag $)

10 Billions of years ago, the Moon had a makeover 🌕

And we’re only just beginning to understand what may have caused it. (Ars Technica)

Quote of the day

“Human beings are not billiard balls on a table.”

—Sonia Livingstone, a psychologist, explains to the Financial Times why it’s so hard to study the impact of technology on young people’s mental health.

The big story

How greed and corruption blew up South Korea’s nuclear industry

In March 2011, South Korean president Lee Myung-bak presided over a groundbreaking ceremony for a construction project between his country and the United Arab Emirates. At the time, the plant was the single biggest nuclear reactor deal in history.

But less than a decade later, Korea is dismantling its nuclear industry, shutting down older reactors and scrapping plans for new ones. State energy companies are being shifted toward renewables. What went wrong? Read the full story.

—Max S. Kim

We can still have nice things

A place for comfort, fun and distraction to brighten up your day. (Got any ideas? Drop me a line or tweet ’em at me.)

+ The Comedy Pet Photography Awards never disappoints.

+ This bit of Chas n Dave-meets-Eminem trivia is too good not to share (thanks Charlotte!)

+ Audio-only video games? Interesting…

+ Trying to learn something? Write it down.